Some Serverless Gotchas

Dec 11, 2020

As it’s been in the news recently, I thought it would be timely to list a few things to watch out for when working with serverless services, serverless-es? Sssserverless? Serverlaas? Servern’ts? Anyway, I figured we should start with execution patterns and how they can go wrong.

Brief Into to Serverless #

In the interest of accessibility I want to make sure that everyone has an idea what serverless is, or at least how I explain it to myself. Feel free to skip this section.

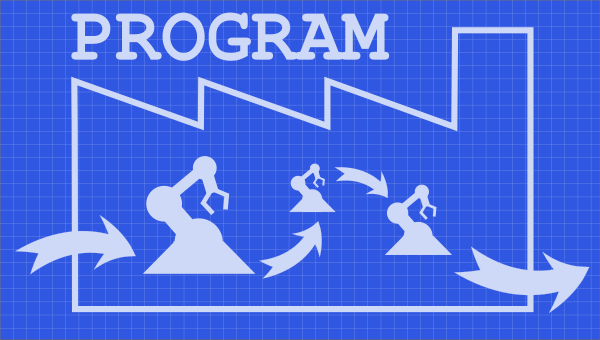

I tend to do better when I break my world down into analogies so I’ll try to do the same here. I like to think of a software project as a factory. Factories take in raw stuff and put it through a series of transformations to produce a desirable output. The internal machinery of the factory consists of two primary components. The equipment that transforms the raw material and the conveyor system that moves material between pieces of equipment. In this analogy the equipment that performs those transformations are functions and the conveyor system is the scaffolding that the functions exist in that determine when and how often those functions are called and with what arguments (materials). At some point during the cloud explosion it became apparent that a lot of our work turned out to be placing our factories inside of somebody else’s larger factory. This made sense initially as the patterns were all well understood, but over time people started to notice the inefficiencies about this pattern.

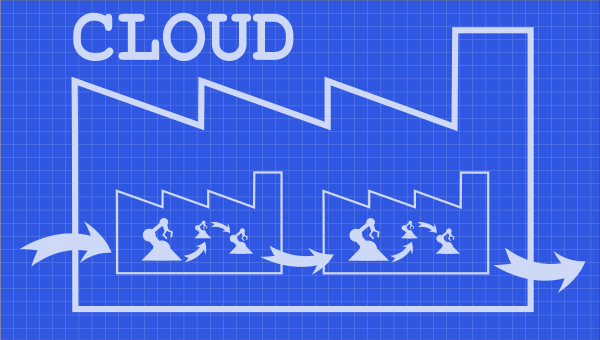

Let’s say I have several factories and inside each of my factories I have a machine that takes long wooden boards and cuts them into squares. This works pretty well as I already have the machine and it’s relatively easy for me to copy it from one factory to another. But now let’s say I have ten factories or a hundred and one of my engineers discovers a flaw in the square cutting machine. How do I upgrade this square cutting machine across all the factories? Applying an update to the square cutting machine everywhere would take time and introduces the possibility for errors. What if one of the factories has modified their square maker and hasn’t told anyone? I could take the square cutting machine out of all of my factories and put it into another factory that just does square cutting, but now all of my factories are relying on one factory to cut squares. What I really want is a service that will take a definition of a square cutting machine, and then put a copy of that square cutting machine everywhere I need it to go. This is essentially what serverless is. You define the machine and then tell the service where you need the copies to go and when.

Obviously it’s more complicated than that, and you can absolutely argue that different decisions made at different points in your project’s evolution might reduce or remove some of the issues above. But I feel that’s the best laid plans argument, and if doing things the right way the first time was easy or obvious, serverless probably wouldn’t be a thing? Regardless it is a thing now, the concept of having only replicators and not having your machines inside a factory at all is being explored as a method of delivering products.

So then the biggest thing that changes when shifting to serverless is the conveyor system. In a traditional software project, the conveyor is for loops, if else statements, the conditionals and flow controls that serve as the vascular system for your program. With serverless that pattern changes to a response to events you define. The serverless system has a definition of your machine and uses events from various sources to determine where and when they are needed. But when you have a machine replicator that can replicate an infinite number of copies and put them anywhere at any time, there are a few things you need to keep in mind to avoid blocking out the sun with a mountain of paperclips.

Going Wrong #

Storage Events #

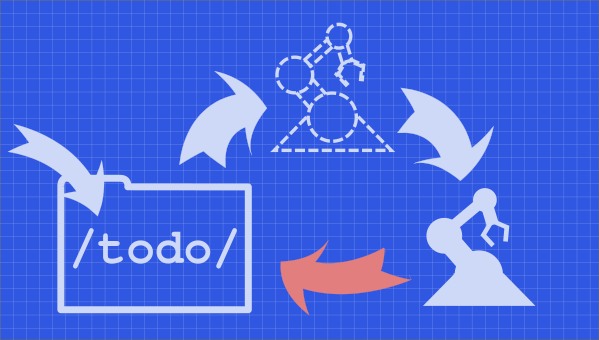

One of my favorite sources of events is in response to storage changes. Users uploading a new profile picture, or a new score entered into a game score table. Teachers submitting grades. Students submitting homework assignments. Etc. But there are a few ways this can go sideways fast. Let’s say you have events being sent to your machine replicator based on new files being uploaded into a bucket, into a folder titled /ToProcess/. Someone uploads a file, your replicator builds a copy of your machine to handle the event and your machine puts the result into /Processed/. Does putting the result into a different folder in the same bucket cause a replicator event?

On AWS, uploading new things into an S3 bucket can be used as replicator events. That event’s configuration does allow you to set filters so that only files placed into certain folders trigger replicator events. But we’re talking about something as trivial as a folder name between you and infrastructure proliferation. I’ve asked AWS S3 experts and they’ve all told me the same thing, even though there is a filter on the event trigger, don’t use it. Best practice is to have the event producing bucket and result buckets be completely separate to avoid something as small as a folder rename causing runaway replication.

This also makes it easier to move to a platform that doesn’t have a filter mechanism at all, like GCP Cloud Storage. Cloud Storage causes a machine replication with every save action, and it’s up to your machine to determine if work needs to be done or not. Then the people that have knowledge of the event and trigger mechanisms are not always the same people that have access to the storage buckets, and none of the storage buckets have “I have triggers configured” indicators anywhere. I would always err on the methods that have smaller landscapes of possible mistakes because over a long enough period of time, all mistakes will be made. Better there are fewer to start.

Queue Consumers #

Having serverless functions respond to queue events is a common pattern. A user orders a product that goes on a queue. A user runs a long running report that goes on a queue. Email queued up to send to thousands of customers. Etc. So how can this go wrong? Do you understand all the behaviors of your queue? Does the queue have some sort of success/fail retry system? What causes a queue event to fail? If it fails does it go back on the queue? How many times does it end up back on the queue?

If the source of your events is the queue then consider every possible circumstance that can put an event on that queue. In some instances the queue itself might be the source, like an improperly exited function being interpreted as a failure, causing the original event to be placed back on the queue, causing another machine to be copied to process the same event over and over.

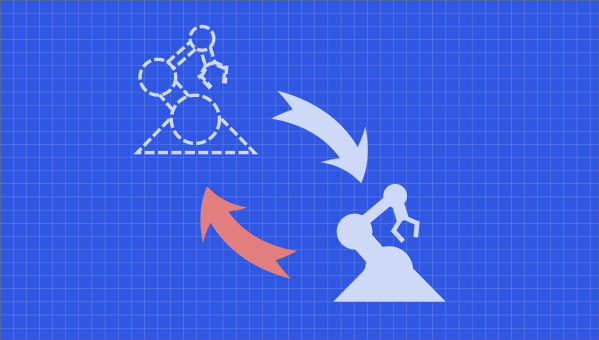

Recursion #

Machines that ask for more copies of themselves. Don’t do this. You will eventually consume all matter on earth and end up with too many paperclips. I would say even if you have a good use case, put a queue or some other event source between your replicator events. It’s best to treat cloud compute as something you can accidentally misconfigure and end up turning off the sun.

Platform Quirks #

Cloud Run on GCP is meant to respond to an http request as it’s input event and as such wants to live entirely within the lifecycle of a reasonable web request. As a result, your instance has a limited amount of time to respond to the http event that triggered it. Then after the event has been responded to, your replicated http responder has the power turned down to a minimum and is set to the side as it shuts itself down.

Each serverless solution focuses on particular types of problems. Cloud Run responds to web requests, Lambda to events described by JSON documents. Be sure to checkout the intended use cases and make sure that there is alignment between the service and your goals. If you find alignment doesn’t come relatively easily I would spend some time shopping for the cloud service with the least friction.